The Role of MLOps in Streamlining AI Software Development and Deployment

August 5, 2025 - Blog

Artificial intelligence (AI) is transforming industries by enabling businesses to make data-driven decisions, automate complex tasks, and deliver smarter products. However, building, deploying, and maintaining AI systems is a multifaceted challenge. The journey from a machine learning (ML) prototype to a robust AI application in production is fraught with hurdles such as reproducing models, scaling them, coordinating across teams, and managing the full model lifecycle. Enter MLOps, a discipline at the intersection of ML, DevOps, and data engineering that promises to streamline AI software development and deployment.

What is MLOps?

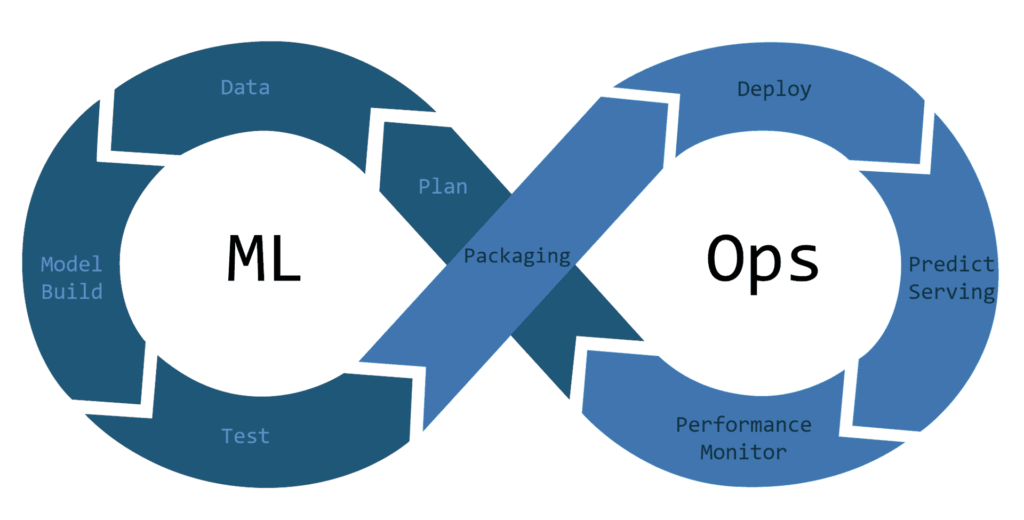

MLOps (Machine Learning Operations) refers to the set of practices and tools that unify ML system development (Dev) and ML system operation (Ops). The core goal is to automate, monitor, and govern all processes, from data collection to deployment, ensuring continuous integration and delivery of reliable, reproducible ML systems.

MLOps extends DevOps practices, such as continuous integration (CI), continuous delivery (CD), automation, and monitoring, into the machine learning lifecycle. Because ML systems depend on more than code, these practices cover data, models, and the underlying infrastructure as well.
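Versioning data and configuration alongside code can be illustrated with a minimal sketch of content-based versioning: hashing the training code, the dataset, and the configuration together yields one identifier that changes whenever any of them changes. The `fingerprint` helper, the JSON serialization, and the 12-character truncation are illustrative assumptions, not the scheme used by any particular MLOps tool.

```python
import hashlib
import json


def fingerprint(code: str, data_rows: list, config: dict) -> str:
    """Hypothetical helper: one version ID covering code, data, and config."""
    # Canonical JSON (sorted keys) so identical content always hashes identically;
    # wrapping all three parts in one object keeps their boundaries unambiguous.
    payload = json.dumps(
        {"code": code, "data": data_rows, "config": config}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


train_code = "def train(rows, lr): ..."
rows = [{"x": 1.0, "y": 2.0}, {"x": 2.0, "y": 4.1}]

v1 = fingerprint(train_code, rows, {"lr": 0.01})
v2 = fingerprint(train_code, rows, {"lr": 0.01})
v3 = fingerprint(train_code, rows + [{"x": 3.0, "y": 6.2}], {"lr": 0.01})
print(v1 == v2)  # True: same code, data, and config give the same version
print(v1 == v3)  # False: appending data produces a new version
```

Storing this identifier with each trained model is one simple way to answer "which inputs produced this model?" later; dedicated data-versioning tools add storage and lineage on top of the same idea.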

Key Challenges in AI Software Development

Before MLOps, organizations relied on ad-hoc scripts, manual interventions, and siloed teams. This resulted in challenges such as:

- Reproducibility: Difficulty ensuring that experiments and models can be reproduced consistently across environments.
- Scalability: Inability to efficiently scale models from small prototypes to production-grade solutions.
- Collaboration: Data scientists, engineers, and IT teams working in isolation, leading to miscommunication and delayed releases.
- Monitoring and Governance: No real-time model monitoring or drift tracking, and difficulty maintaining compliance.

The MLOps Lifecycle: Streamlining AI Development and Deployment

The MLOps approach introduces a structured lifecycle, breaking down the silos between development, data science, and operations. Here’s how MLOps streamlines modern AI workflows:

- Data Management & Versioning
  - MLOps tools track the datasets used for model training, ensuring that every version of the data is catalogued and available for future audits.
  - This enables reproducibility, as each model is associated with the exact dataset versions used during development.
- Automated Model Training & Experiment Tracking
  - Frameworks like MLflow and Kubeflow automate workflows for running experiments, tuning hyperparameters, and tracking metrics.
  - All code, data, models, and experiments are versioned, so teams can retrace steps and understand which configurations led to the best results.
- Model Validation & Continuous Integration
  - Automated testing verifies that models behave as expected before they move to the next stage.
  - Integration tests check both model performance and compatibility with existing systems.
- Model Deployment & Continuous Delivery
  - Reproducible deployment pipelines built on CI/CD principles enable a seamless transition of models from staging to production.
  - Rollback and roll-forward mechanisms let teams address issues quickly while minimizing service downtime.
- Monitoring, Logging & Lifecycle Management
  - MLOps platforms monitor models in production for drift, performance degradation, and anomalies.
  - Automated alerts and canary releases help ensure that only models meeting performance targets serve customers.
- Collaboration & Governance
  - Centralized dashboards and documentation foster better collaboration among data scientists, developers, and stakeholders.
  - Audit trails that support compliance are generated automatically.
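The validation gate and the promotion/rollback steps in this lifecycle can be sketched as a toy in-memory model registry. This is a hypothetical illustration, assuming accuracy is the gating metric with an arbitrary 0.8 floor: a candidate is promoted only if it clears the floor and does not regress against the current production model, and a rollback restores the previously promoted version. Real registries (for example, MLflow's Model Registry) expose different APIs and persist their state externally.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    """Toy in-memory registry; hypothetical, not any real tool's API."""
    metrics: Dict[str, dict] = field(default_factory=dict)  # version -> metrics
    history: List[str] = field(default_factory=list)  # promoted versions, oldest first

    @property
    def production(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def rollback(self) -> Optional[str]:
        # Retire the current production version and restore the previous one;
        # the last remaining version is kept so something is always serving.
        if len(self.history) > 1:
            self.history.pop()
        return self.production


def passes_validation(candidate: dict, baseline: Optional[dict], min_accuracy: float = 0.8) -> bool:
    # Gate 1: an absolute quality floor (0.8 is an arbitrary example value).
    if candidate["accuracy"] < min_accuracy:
        return False
    # Gate 2: no regression against the model currently in production.
    return baseline is None or candidate["accuracy"] >= baseline["accuracy"]


def deploy(registry: ModelRegistry, version: str, metrics: dict) -> bool:
    current = registry.production
    baseline = registry.metrics[current] if current is not None else None
    registry.metrics[version] = metrics
    if passes_validation(metrics, baseline):
        registry.history.append(version)  # promote (roll forward)
        return True
    return False


registry = ModelRegistry()
print(deploy(registry, "v1", {"accuracy": 0.86}))  # True: first model clears the floor
print(deploy(registry, "v2", {"accuracy": 0.83}))  # False: regresses against v1
print(deploy(registry, "v3", {"accuracy": 0.89}))  # True: v3 is now in production
print(registry.rollback())  # v1: e.g. after v3 misbehaves in production
```

In a CI/CD setup, `deploy` would typically run as a pipeline stage after training, with the gate's thresholds kept in version-controlled configuration.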

Benefits of Adopting MLOps for AI Software Development

Adopting MLOps delivers tangible results across teams and organizations:

- Faster Time to Market: Automating the ML pipeline reduces manual bottlenecks, accelerating deployment cycles.
- Improved Model Quality: Automated validation, testing, and monitoring yield more robust models.
- Scalable Operations: Infrastructure as code and containerization allow scalable model deployment even across different cloud environments.
- Enhanced Collaboration: Shared repositories, automated workflows, and standard processes align teams towards a common goal.
- Cost Efficiency: Automated resource management and model monitoring reduce operational overhead and cloud spend.

How Code-Driven Labs Enable MLOps

One of the most significant advancements powering modern MLOps is the rise of code-driven labs. These are development environments where data science experimentation, model development, and ML engineering all happen through code—enabling automation, reproducibility, and seamless handoff from experimentation to production.
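As a minimal sketch of a pipeline that lives entirely in code: each stage is a plain function, and the pipeline itself is an ordered list of those functions, so the same sequence runs identically on a laptop or in a CI job. The stage names, the missing-value rule, and the one-feature least-squares model are hypothetical choices made to keep the example self-contained; for brevity it evaluates on the training rows, whereas a real pipeline would use held-out data.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]


def preprocess(state: State) -> State:
    # Hypothetical cleaning rule: drop rows with a missing x or y.
    rows = [r for r in state["raw"] if r["x"] is not None and r["y"] is not None]
    return {**state, "rows": rows}


def train(state: State) -> State:
    # Fit y = w * x + b with closed-form ordinary least squares (one feature).
    rows = state["rows"]
    n = len(rows)
    mean_x = sum(r["x"] for r in rows) / n
    mean_y = sum(r["y"] for r in rows) / n
    cov = sum((r["x"] - mean_x) * (r["y"] - mean_y) for r in rows)
    var = sum((r["x"] - mean_x) ** 2 for r in rows)
    w = cov / var
    return {**state, "model": (w, mean_y - w * mean_x)}


def evaluate(state: State) -> State:
    # Mean squared error on the training rows (a real pipeline would hold out data).
    w, b = state["model"]
    rows = state["rows"]
    mse = sum((w * r["x"] + b - r["y"]) ** 2 for r in rows) / len(rows)
    return {**state, "metrics": {"mse": mse}}


# The pipeline is just data: an ordered list of stages that CI can run on every commit.
PIPELINE: List[Step] = [preprocess, train, evaluate]


def run_pipeline(raw: list, steps: List[Step] = PIPELINE) -> State:
    state: State = {"raw": raw}
    for step in steps:
        state = step(state)
    return state


result = run_pipeline(
    [{"x": 1.0, "y": 2.0}, {"x": 2.0, "y": 4.0}, {"x": 3.0, "y": 6.0}, {"x": None, "y": 1.0}]
)
print(result["model"], result["metrics"])  # (2.0, 0.0) {'mse': 0.0}
```

Because every stage is an ordinary function, each one can be unit-tested on its own and reused in other pipelines, which is the practical payoff of keeping experimentation in code.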

Key features and impacts of code-driven labs in MLOps:

- Consistent Environments: All experiments and pipelines are codified as scripts or notebooks, ensuring that the environment is defined and consistent across development and production.
- Version Control: Leveraging tools like Git, code-driven labs facilitate versioning of code, models, configuration, and data, making it easy to revert changes, track progress, and reproduce experiments.
- Experiment Tracking: Metadata from every run (datasets, model parameters, hyperparameters, and outcomes) is automatically logged, allowing teams to compare experiments and select the optimal model.
- Automated Pipelines: Code-driven labs enable the creation of automated pipelines for data preprocessing, model training, and evaluation. These can be triggered by code commits or scheduled jobs, aligning with CI/CD practices.
- Reusable Components: By modularizing code and functionalities, teams can reuse components across projects, enhancing productivity and maintaining high standards.
- Seamless Handoff: Once a model is ready, its entire lineage (code, data, metrics, artifacts) moves through the pipeline to deployment, minimizing friction between data science and operations.
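Experiment tracking of the kind described in these features can be approximated with an append-only log: each run records its parameters, the data version it used, and its metrics, and selecting the best model becomes a query over that log. The JSON-lines format and the function names are assumptions for illustration; dedicated tools such as MLflow provide this with richer metadata and a UI. The data version string and RMSE values below are placeholders, not real results.

```python
import json
import tempfile
from pathlib import Path
from typing import List


def log_run(log_path: Path, params: dict, data_version: str, metrics: dict) -> None:
    """Append one run's metadata as a JSON line (hypothetical log format)."""
    record = {"params": params, "data_version": data_version, "metrics": metrics}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")


def load_runs(log_path: Path) -> List[dict]:
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def best_run(runs: List[dict], metric: str, lower_is_better: bool = True) -> dict:
    # Compare every logged run on one metric and return the winning record.
    pick = min if lower_is_better else max
    return pick(runs, key=lambda r: r["metrics"][metric])


with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "runs.jsonl"
    # Hypothetical learning-rate sweep; the RMSE values are placeholders.
    for lr, rmse in [(0.1, 0.42), (0.01, 0.31), (0.001, 0.35)]:
        log_run(log, {"lr": lr}, data_version="3f2a9c", metrics={"rmse": rmse})
    runs = load_runs(log)
    winner = best_run(runs, "rmse")
    print(winner["params"], winner["data_version"])  # {'lr': 0.01} 3f2a9c
```

Because the winning record carries its parameters and data version, it holds exactly the lineage a deployment pipeline needs to rebuild or audit the chosen model.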

In summary, code-driven labs operationalize best practices in MLOps by turning what was once a manual, error-prone process into a scalable, automatable system. They provide a bridge between rapid experimentation and reliable model deployment.

Bringing It All Together: Real-World Impact

Let’s consider a practical scenario: an online retailer wants to deploy a recommendation system powered by AI. Traditionally, data scientists might prototype a model using sample data on one machine, hand off scripts to engineers for productionization, and rely on manual integration scripts for deployment. Any changes require extensive coordination and manual rework.

With an MLOps approach augmented by code-driven labs:

- Data scientists write code that is integrated, versioned, and executed in scalable environments, reducing inconsistencies.
- Pipelines automatically retrain the model whenever new data arrives or code is updated, reusing the versioned code and parameters from previous experiments.
- Model serving is automated and monitored continuously, with A/B testing frameworks in place to evaluate performance in real time.
- If the model starts to drift, alerts are generated, and rollback or retraining happens via automated workflows.
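The drift check in this scenario could be implemented in many ways. One common choice is the Population Stability Index (PSI), which compares the binned distribution of a feature in live traffic against its training baseline. The sketch below assumes equal-width bins derived from the baseline and the frequently cited rule-of-thumb threshold of 0.2; both are assumptions to tune per feature, and the returned action string stands in for whatever retraining or rollback workflow a team actually triggers.

```python
import math
from typing import List, Tuple


def psi(expected: List[float], actual: List[float], bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: List[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins from the baseline; out-of-range values are clamped
            # into the first or last bin.
            idx = math.floor((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(baseline: List[float], live: List[float], threshold: float = 0.2) -> Tuple[float, str]:
    # 0.2 is a commonly cited rule of thumb, not a universal standard.
    score = psi(baseline, live)
    return score, ("retrain" if score > threshold else "keep")


baseline = [i / 100 for i in range(100)]        # training-time feature values
stable = [i / 100 + 0.001 for i in range(100)]  # nearly identical live traffic
shifted = [0.5 + i / 200 for i in range(100)]   # live traffic concentrated in the upper half

print(check_drift(baseline, stable)[1])   # keep
print(check_drift(baseline, shifted)[1])  # retrain
```

In production this check would usually run per feature on a schedule, with the "retrain" outcome raising an alert and starting the automated retraining or rollback workflow.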

This not only accelerates the release of new AI features, but it also ensures quality, compliance, and scalability at every stage of the AI lifecycle.

Conclusion

MLOps is transforming AI software development and deployment by embedding DevOps principles into the machine learning lifecycle. It eliminates silos, enhances collaboration, ensures reproducibility, and automates the end-to-end delivery pipeline. Code-driven labs play a crucial role by standardizing environments, capturing all experiment metadata, and enabling smooth transitions from development to production.

Organizations embracing MLOps and code-driven labs are positioned to outpace competitors—deploying robust, scalable, and compliant AI solutions with velocity and confidence. By creating a culture of automation, transparency, and continuous improvement, MLOps turns AI ambition into actionable, operational reality.